Background

In the past, Windows Server Failover Clustering (WSFC) cluster only monitored the health of your entire Always On Availability Groups (AO AG) primary replica. This meant that even when your database wasn’t usable by your application because it, for example, lost the ability to write transactions, no automatic failover was triggered. In SQL Server 2016 a feature called Enhanced Database Failover was introduced. It added two of the most basic checks which monitor if i) a database placed inside AOAG is in ONLINE state and if ii) all of its files are in readable / writable (which is checked only if read or write operation is actually performed). It still wasn’t satisfying enough because even when this feature was combined with a flexible failover policy (which is still NOT database aware) further disaster scenarios could leave your database in an unusable state without any attempts to automatically failover to the D/R site.

As a result, this summer Microsoft quietly changed a behavior for how database health is monitored. They added some additional checks against the database which should help to evaluate if it remains in a healthy state. These checks are:

- 605 – Page or allocation corruption.

- 823 – Checkpoint failures.

- 829 – Disk corruption.

- 832 – Hardware or memory corruption.

- 1101 – No disk space available in a filegroup.

- 1105 – No disk space available in a filegroup.

- 5102 – Missing filegroup ID requests.

- 5180 – Wrong file ID requests.

- 5515 – Filestream directory cannot be accessed

- 5534 – Log corruption due to FILESTREAM operation log record.

- 5535 – FILESTREAM data container corruption.

- 9004 Log Corruption

Source: SQL Server Team Blog

When a health check routine is run and notices one of these errors, it saves it and compares it with the results of two additional runs. If all of them contain the same error, a failover is triggered.

So far it all sounds just great but unfortunately, this is not always the case.

Issue

We have encountered the issue with new database health checks when our customer informed us that their application is not able to connect to the Availability Group listener. We were informed that it has most likely something to do with the fact that the database has reached its MAXSIZE. Unfortunately, this setting couldn’t be changed because the database was inaccessible.

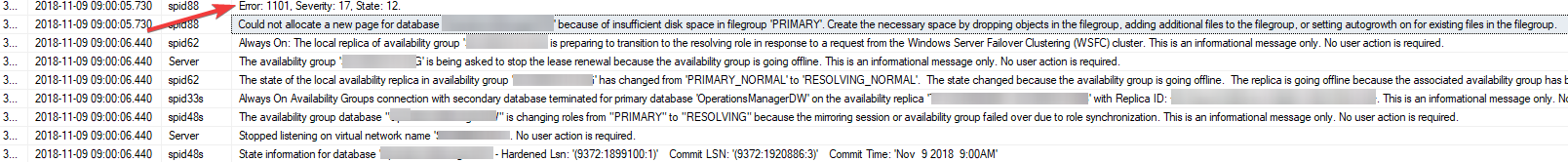

When we connected to the environment, we saw several errors 1101:

Could not allocate a new page for database ‘XXXXX’ because of insufficient disk space in filegroup ‘PRIMARY’. Create the necessary space by dropping objects in the filegroup, adding additional files to the filegroup, or setting autogrowth on for existing files in the filegroup.

Since Error 1101 is now monitored by a health check routine, it caused a failure of the whole AO AG (DB_FAILOVER option was enabled). As a consequence, the Availability Group ended up in RESOLVING state from SQL Server perspective and in FAILED state from WSFC perspective.

As a side effect, it also caused the database to not be accessible by T-SQL. Therefore, MAXSIZE couldn’t be increased nor could DB_FAILOVER option be turned off.

Solution

One of the restrictions which MS gives regarding AO AG is “Don’t use WSFC Failover Cluster Manager“. Although there are very good reasons why you shouldn’t do that, in this case we had to go exactly there and put the cluster resource for this AOAG manually online.

While the client application wasn’t turned off / blocked and still tried to (unsuccessfully) continuously write to the database, we had about 30 seconds to modify MAXSIZE for the affected database or to turn DB_FAILOVER option for the Availability Group off. Once it was done, the issue disappeared.

Later we have realized that the command:

ALTER AVAILABILITY GROUP xxx FAILOVER

would also help, if we run it on primary replica.

Summary

The key takeaway from this incident is that you shouldn’t ever place any database with configured MAXSIZE to the AO AG with DB_FAILOVER option enabled. Otherwise you are risking an outage not only of this specific database but of all databases placed inside the same Availability Group. In addition to that you need to be aware that if it happens, you have to manually run FAILOVER command via T-SQL or put WSFC Resource for the affected AO AG to the ONLINE state. The WSFC with the default policy won’t do it automatically sooner than in 6 hours (and if your app is still trying to write more data it would go down again in 30 seconds).

I personally find this behavior very unfortunate. I am not sure if Microsoft realized that error 1101 can be encountered in two scenarios:

- A database filegroup can’t grow because a drive(s) is/are out of space

- You configured a MAXSIZE quota

While I can imagine how beneficial DB_FAILOVER caused by this error can be in the first case I can hardly find any case when it could help in the second case.

It’s worth mentioning that Database Level Health Detection is still not default for SQL Server Always On Availability Groups. On the other hand, once you enable it, then CU9 for 2017 release and CU2 for 2016 SP2 release (CU10 for 2016 SP1) will introduce this behavior. The only way how to get rid of it is to disable all additional health checks by TF 9576.

Repro script:

–You should already have configure AO AG on Server A and Server B

–Sever A

CREATE DATABASE [MaxSizeDB]

GO

ALTER DATABASE [MaxSizeDB] MODIFY FILE (NAME = N’MaxSizeDB’, MAXSIZE = 8192KB )

GO

BACKUP DATABASE MaxSizeDB TO DISK = ‘NUL’

GO

CREATE AVAILABILITY GROUP [AGTest]

WITH (DB_FAILOVER = ON) FOR DATABASE [MaxSizeDB]

REPLICA ON N’ServerA\SQLX061′

WITH (ENDPOINT_URL = N’TCP://ServerA.domain.local:5022′, FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT, BACKUP_PRIORITY = 50,

SEEDING_MODE = AUTOMATIC, SECONDARY_ROLE(ALLOW_CONNECTIONS = NO)),

N’ServerB\SQLX061′

WITH (ENDPOINT_URL = N’TCP://ServerB.domain.local:5022′, FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,

BACKUP_PRIORITY = 50, SEEDING_MODE = AUTOMATIC, SECONDARY_ROLE(ALLOW_CONNECTIONS = NO));

GO

–Server B

ALTER AVAILABILITY GROUP [AGTest] JOIN;

GO

ALTER AVAILABILITY GROUP [AGTest] GRANT CREATE ANY DATABASE;

GO

–Server A

USE MaxSizeDB

GO

CREATE TABLE foo (bar CHAR(8000))

GO

–fill to ~MAXSIZE

INSERT dbo.foo VALUES(N’1′)

GO 656

–Run as many times a ‘Maximum failures in specified period’ policy value in WSFC + 1 (default is 1 + 1)

–You might need to connecte to other replica

WHILE (1 = 1)

BEGIN

BEGIN TRY

INSERT dbo.foo VALUES(N’1′)

END TRY

BEGIN CATCH

END CATCH

WAITFOR DELAY ’00:00:01′

END;

UPDATE 2018-11-20: I have submitted this issue to MS Azure Feedback

UPDATE 2018-11-21: A discussion with Tiger team showed, they don’t consider this behavior as a bug, so we can’t expect a fix for this. As I don’t agree with it – my reasons for that are written in a summary paragraph of the blog – I am fully respecting their opinion.